Building Faster, More Reliable Agents with Sequential Retrieval-Augmented Generation

By Adrian H. Raudaschl in TDS Archive

Oct 28, 2024 · 13 min read

The Wonderful World of SeqRAG. Illustration by author.

SeqRAG: Agents for the Rest of Us

AI agents have great potential to perform complex tasks on our behalf. Still, despite advances like OpenAI's o1 reasoning foundation models or even Claude's more recent "computer use" feature, it's clear that realising this potential in a reliable, everyday, helpful manner is going to be challenging. In fact, it's been challenging for a while.

Back in 1997, at that year's Apple Developer Conference, Steve Jobs was asked, "How can we get computers to work for people instead?" In his answer, he acknowledged the challenges facing AI agents at the time.

"To bet our future right now on the results of research into the agent world… would be foolish," Jobs cautioned before adding, "I think at some point they're (agents) going to start doing more for us in ways we can't imagine." (Jobs, 1997)

His reaction is worth watching here.

Jobs contemplating agent-like solutions at the 1997 Apple Developer Conference.

The journey to reliable AI agents is an exciting space to work in right now, especially as researchers increasingly find ways of crafting agents capable of solving multi-step tasks. And while adaptive methods, like swarm-based or memory-enabled approaches, offer exciting possibilities, they also introduce unpredictability.

What we need is a solution that can handle a wide array of complex tasks with minimal intervention, allowing us to accomplish more with less effort.

The goal is to think of it as having a full-time assistant that can handle complex tasks in minutes. Imagine being able to:

- Plan a holiday to Paris, booking flights, accommodations, and activities for the whole family in one go.

- Conduct a competitive analysis of a product and develop an evidence-based strategic approach.

- Create a comprehensive, personalized care plan for an elderly diabetic patient.

- Optimise an investment portfolio according to specific risk tolerances and real-time market conditions, handling all the research and actions automatically.

- Review and analyze contracts, proposals, or historical rulings to support a legal dispute, without the usual manual searching, reading, and decision-making.

Pulling this off requires a system that employs multi-step planning, access to varied information sources, and the ability to make decisions independently. Tools like Perplexity AI's Pro mode, I believe, have already made some significant advancements in this space.

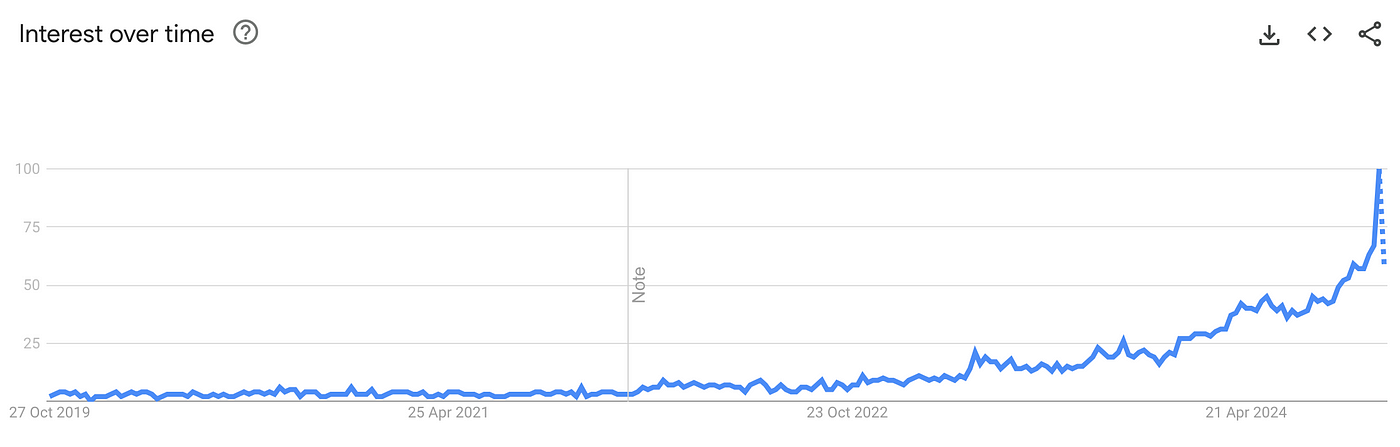

Google search interest for "AI agents" has skyrocketed in recent years, reflecting the growing excitement in this field. Screenshot by author from Google Trends Oct 2024.

We have spent months experimenting with promising AI agent frameworks in multiple complex, multi-API environments. We've explored the most promising candidates, including Microsoft's AutoGen, which enables multi-agent conversations; ReAct, which combines reasoning and acting; PlanRAG, which focuses on iterative planning and retrieval; and many others, from OpenAI's assistant tech to robotics.

While these methods show promise and progress in developing AI agents, more work is required for production use. The strongest candidate I have seen to date is ChatGPT o1, but even that model will require a framework to be able to execute complex functions in real-world scenarios.

The Limitations of Current LLM-based Agents

- Unpredictability: Most current AI frameworks lack determinism in their outputs and, therefore, do not behave predictably on multi-step tasks.

- Complex Debugging: Tracing decision paths is a nightmare in complex systems where many agents interact with one another.

- High Computational and Time Costs: Continuous replanning is resource-intensive and slow.

- Production Challenges: Making such agents run reliably and performantly within a production environment is challenging.

We find Agent-based LLMs Perform Best When…

- Given a High-Level Outline: They do well when working from a clear overview of the whole task that has been planned out in advance.

- Focused on One Task at a Time: They perform better when they can focus on one component or piece of the project at a time.

- Provided Clear Context and Instructions: They do a better job when context and instructions are spelled out in detail at each step.

Sequential Retrieval-Augmented Generation (SeqRAG) is a framework we designed to address these challenges. By prioritising a structured, step-by-step approach that adds incremental detail to instructions, SeqRAG aims to make complex problem-solving in AI agents more achievable.

SeqRAG: Technical Architecture and Implementation

Those eager to dive into the prompts and code and skip the detailed explanations can start experimenting with SeqRAG here.

SeqRAG's structured approach to problem-solving builds in detail and complexity progressively. This helps the model concentrate on one narrow task at a time and, in our experience, complete it faster and more reliably, with higher-quality output at the end.

The logic behind SeqRAG is to establish the order of tools and their settings at the outset and then enhance and fine-tune them as you progress through each stage.

It's like sketching an outline of a portrait and gradually adding more details, colours, and outlines, like in Picasso's Le Taureau. But in our case, we begin with an overview of our goals and the broad steps a system needs to take to achieve them. Then, we progressively add details at each step to ensure we have the precision needed for each tool to reach its objective.

Four primary components comprise SeqRAG's architecture, each optimised for a specific role described below.

SeqRAG Architecture Overview

SeqRAG's architecture is based on four specialised agents, each responsible for a specific function within SeqRAG.

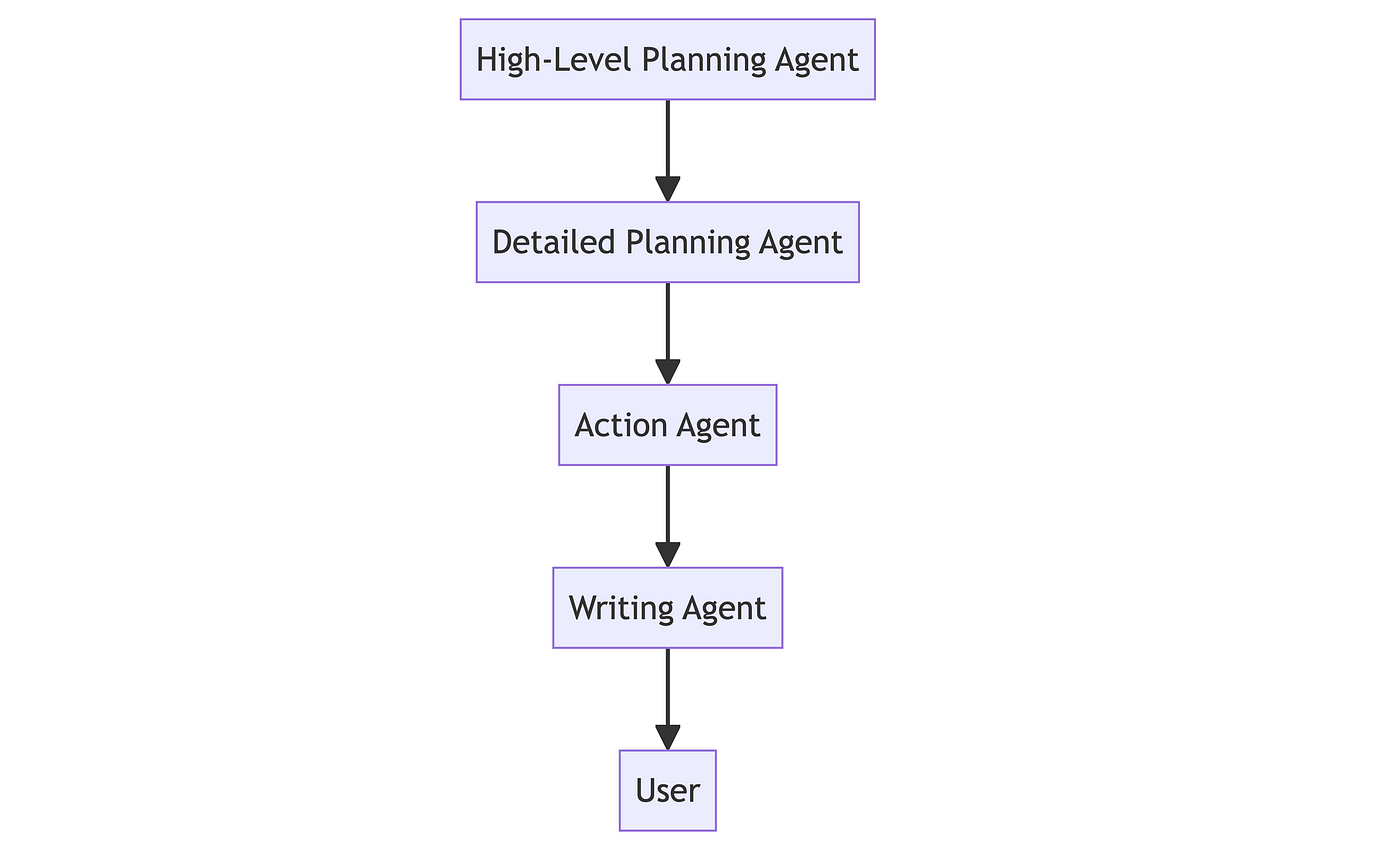

At the top level is the High-Level Planning Agent (HLPA), which generates a broad, step-by-step plan to guide the process and considers any useful information from the conversation history. Then, the Detailed Planning Agent (DPA) refines this high-level plan by selecting specific tools and defining necessary parameters. Next, the Action Agent (AA) executes each step in sequence, retrieves the results, and stores them for context in subsequent action steps. Finally, the Writing Agent (WA) combines responses from each completed step to produce a coherent response in your desired format.

Hierarchical AI Agent Structure: From High-Level Planning to User Interaction. Image by author.
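
The four-stage flow described above can be sketched as a plain sequential pipeline. This is a minimal illustration rather than the SeqRAG implementation: the name `run_seqrag` and the four stub agents are assumptions made for this example, and each stub stands in for what would be an LLM or tool call in a real system.

```python
from typing import Any, Callable

# Hypothetical aliases: each agent is modelled as a plain callable.
Plan = dict[str, Any]
StepResults = list[dict[str, Any]]


def run_seqrag(
    query: str,
    history: list[str],
    hlpa: Callable[[str, list[str]], Plan],
    dpa: Callable[[Plan], Plan],
    aa: Callable[[Plan], StepResults],
    wa: Callable[[str, StepResults], str],
) -> str:
    """Run the four agents strictly in order: plan, refine, execute, write."""
    high_level_plan = hlpa(query, history)  # broad steps, no tools yet
    detailed_plan = dpa(high_level_plan)    # tools and parameters added
    results = aa(detailed_plan)             # one result per executed step
    return wa(query, results)               # final response for the user


# Stub agents (assumptions) so the sketch runs without any model or API.
def stub_hlpa(query: str, history: list[str]) -> Plan:
    return {"steps": [{"step": "Flight Booking",
                       "description": "Find and book flights to Paris."}]}


def stub_dpa(plan: Plan) -> Plan:
    return {"steps": [{**step, "tool": "FlightSearchAPI",
                       "parameters": {"destination": "Paris"}}
                      for step in plan["steps"]]}


def stub_aa(plan: Plan) -> StepResults:
    return [{"step": step["step"],
             "result": f"{step['tool']} called with {step['parameters']}"}
            for step in plan["steps"]]


def stub_wa(query: str, results: StepResults) -> str:
    return " ".join(item["result"] for item in results)


answer = run_seqrag("Plan a trip to Paris", [],
                    stub_hlpa, stub_dpa, stub_aa, stub_wa)
print(answer)
```

Because every stage is just a function of the previous stage's output, each one can be swapped for a stub and inspected on its own.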

So that's a high-level overview, and you may ask yourself, "Why take this sequential approach and not an adaptive one like everyone else? It seems too simple, no?"

Our experimentation with recursive, multi-communicating agent models like AutoGen revealed the complexity and variability these models introduced, which can be difficult to manage in production. SeqRAG offers a simpler approach that might suit tasks where reliability is more critical than flexibility.

Let's get into the details of SeqRAG's four agents.

Component Details and Rationale

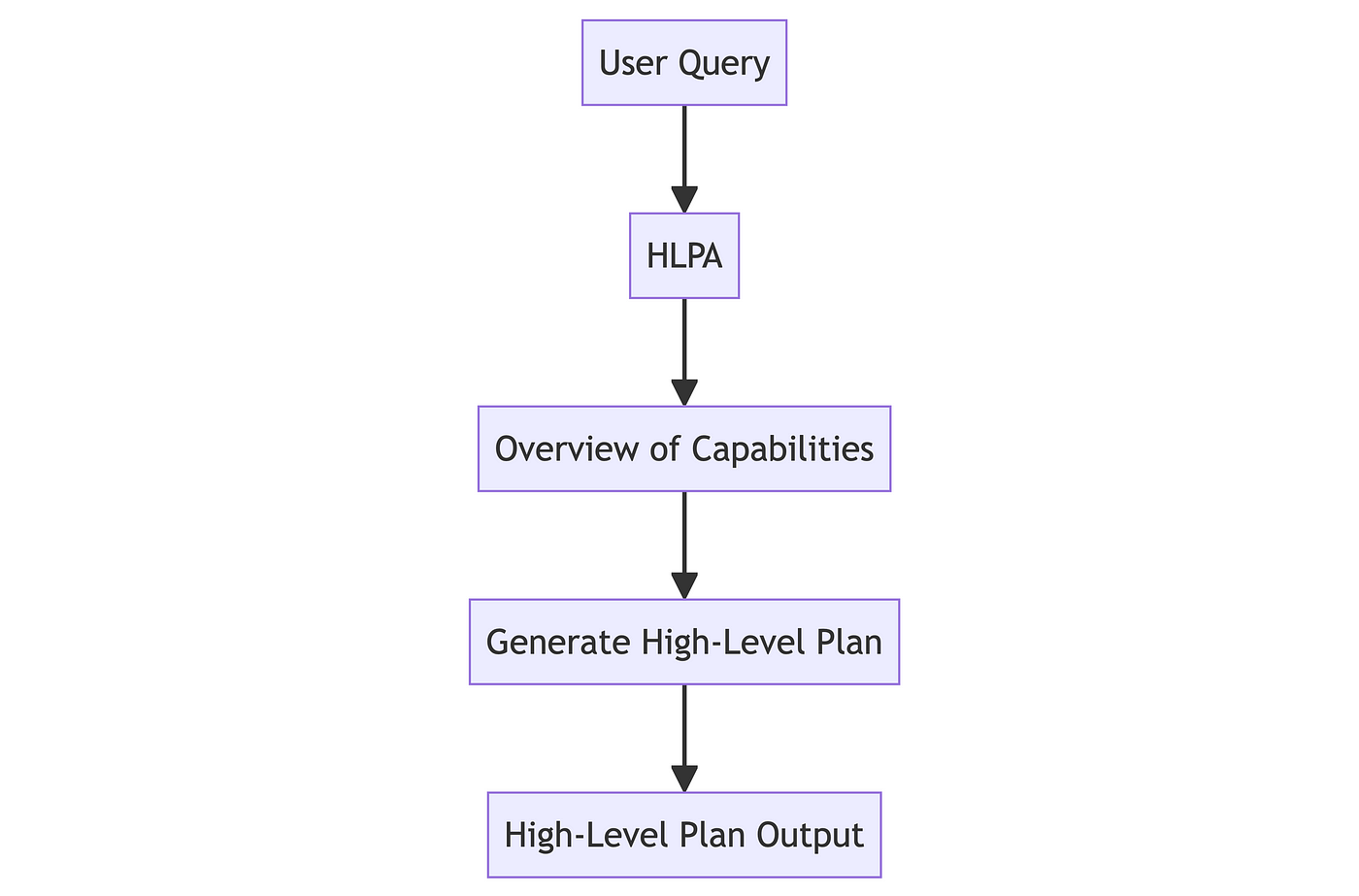

1. High-Level Planning Agent (HLPA)

The High-Level Planning Agent (HLPA) system prompt includes information about the types of data it can access, the logical sequence it should follow, and any inherent limitations or restrictions of the system it is planning for. The output is a high-level plan that takes into account the user's query, relevant information from the conversation history, and which outputs from earlier steps will be needed to complete later steps in the plan.

- Function: Creates a broad plan outlining necessary steps without tool specifics.

- Implementation: Utilises prompt engineering to delineate available APIs, tools, and information sources.

- Model Choice: Smaller models (e.g., LLaMA 8B or GPT-3.5/4o-mini) are sufficient for rapid iteration and testing.

- Output: JSON-structured high-level plan.

High-Level Planning Agent (HLPA) Workflow. Image by author.

Example Input: 'Plan a week-long trip to Paris for two people, including flights, accommodation, and activities.'

Example Output:

    {
      "steps": [
        {"step": "Flight Booking", "description": "Find and book flights to Paris."},
        {"step": "Accommodation Booking", "description": "Search for and reserve accommodations in Paris."},
        {"step": "Itinerary Planning", "description": "Create a day-by-day itinerary of activities and attractions to visit."},
        {"step": "Transportation Arrangements", "description": "Arrange local transportation options (e.g., metro, taxis)."},
        {"step": "Packing List", "description": "Generate a packing list based on the planned activities and weather forecast."}
      ]
    }

Rationale: This step abstracts the overall problem-solving process, allowing for more focused and efficient planning in subsequent stages. Because the Detailed Planning Agent will follow this high-level plan, it provides a quick way to check the overall logic during debugging without having to wait for the entire process to finish.
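
To make the HLPA's inputs and output more concrete, here is a minimal sketch of how its system prompt might be assembled and how the returned JSON could be checked before it is handed to the next agent. The function names, the prompt wording, and the example sources and limitation are all assumptions for illustration. They are not taken from the SeqRAG repository.

```python
import json

# Hypothetical description of what the system can access.
AVAILABLE_SOURCES = {
    "flights": "Search and book flights",
    "hotels": "Search and reserve accommodation",
}


def build_hlpa_prompt(sources: dict[str, str], limitations: list[str]) -> str:
    """Assemble a system prompt listing data sources and system limits."""
    lines = [
        "You are a planning agent. Produce a high-level plan as JSON.",
        'Use the shape {"steps": [{"step": "...", "description": "..."}]}.',
        "Do not name specific tools.",
        "Available information sources:",
    ]
    lines += [f"- {name}: {description}" for name, description in sources.items()]
    lines.append("Limitations:")
    lines += [f"- {item}" for item in limitations]
    return "\n".join(lines)


def parse_high_level_plan(raw: str) -> dict:
    """Parse the model's reply and check the minimal expected structure."""
    plan = json.loads(raw)
    if not isinstance(plan, dict):
        raise ValueError("plan must be a JSON object")
    steps = plan.get("steps")
    if not isinstance(steps, list) or not steps:
        raise ValueError("plan must contain a non-empty 'steps' list")
    for index, step in enumerate(steps):
        if not isinstance(step, dict):
            raise ValueError(f"step {index} must be a JSON object")
        missing = {"step", "description"} - set(step)
        if missing:
            raise ValueError(f"step {index} is missing keys: {sorted(missing)}")
    return plan


prompt = build_hlpa_prompt(AVAILABLE_SOURCES, ["Cannot process payments"])
plan = parse_high_level_plan(
    '{"steps": [{"step": "Flight Booking", '
    '"description": "Find and book flights to Paris."}]}'
)
print(prompt)
print(plan["steps"][0]["step"])
```

Checking the structure at this point is cheap, and it fits the debugging benefit described above: a malformed plan is caught before any tool is called.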

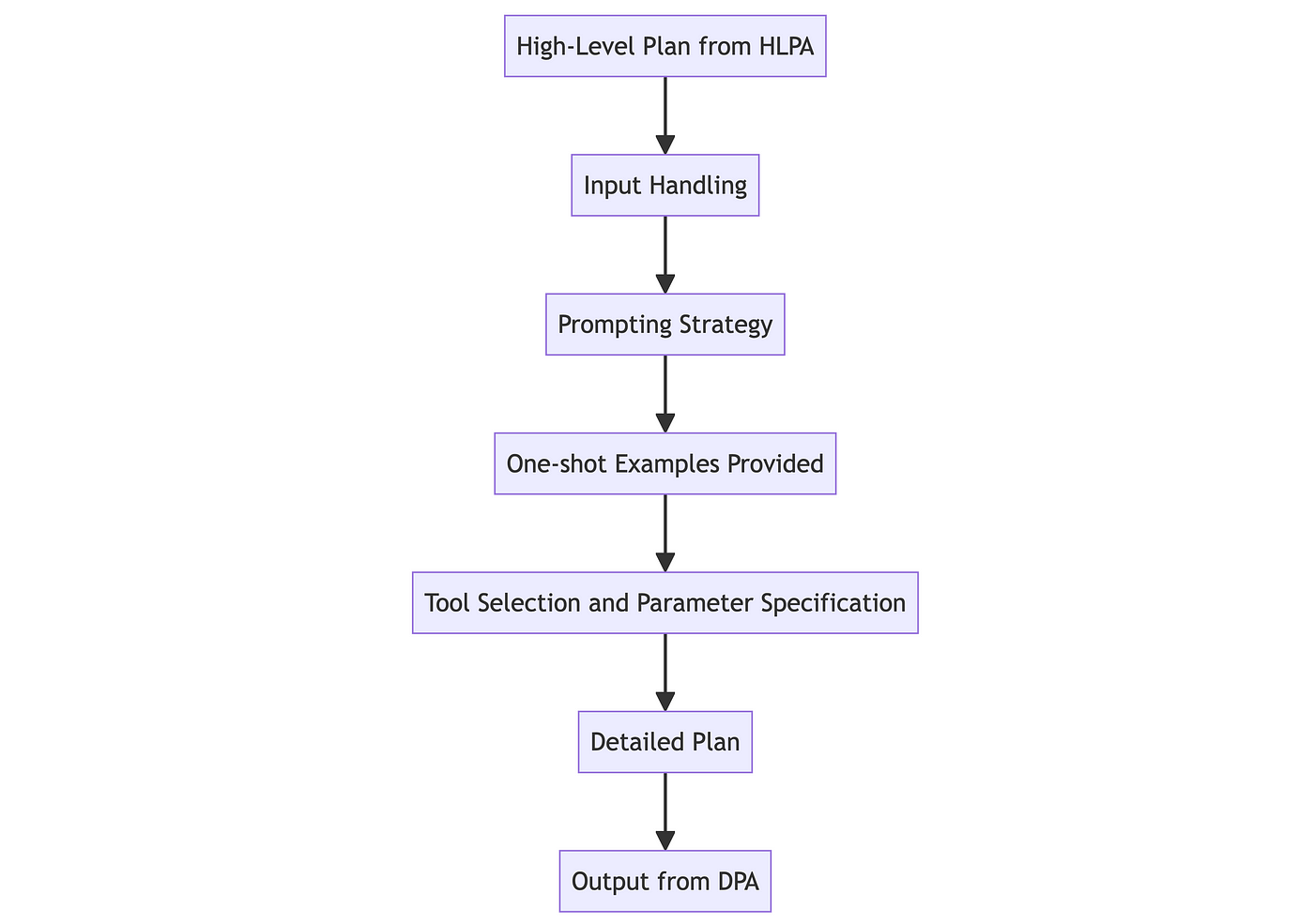

2. Detailed Planning Agent (DPA)

The Detailed Planning Agent (DPA) receives the high-level plan generated by the HLPA. With this as a guide, the DPA's prompt incorporates comprehensive information about each available tool, including specific usage instructions and the parameters available.

This structured approach helps the DPA refine the plan, select tools and add parameters, taking into account the outputs of prior steps to ensure each subsequent action builds logically on the last.

Additionally, one-shot examples are provided to guide the model in creating accurate specifications, enabling the DPA to deliver a detailed, actionable set of instructions to be executed.

Detailed Planning Agent (DPA) Workflow. Image by author.

- Function: Expands the high-level plan by specifying the exact tools and parameters needed for each step, ensuring the entire plan is logical and contextually aware of the outputs of all prior steps.

- Input: Receives the high-level plan from HLPA.

- Model Choice: Utilises larger, more capable models to manage the complexity of tool selection and parameter specification (e.g., GPT-4o+, o1, Claude 3.5+, Llama 3+ 70B).

- Output: Produces a detailed, contextual JSON plan with specific tools and parameters for each step.

Example Output:

    {
      "steps": [
        {
          "step": "Flight Booking",
          "tool": "FlightSearchAPI",
          "parameters": {
            "departure": "London",
            "destination": "Paris",
            "date": "2024-08-01",
            "class": "Economy"
          },
          "output": {"confirmation_number": "ABC123"}
        },
        {
          "step": "Accommodation Booking",
          "tool": "HotelBookingAPI",
          "parameters": {
            "location": "Paris",
            "check_in": "2024-08-01",
            "check_out": "2024-08-07",
            "guests": 2,
            "reference": "ABC123"
          },
          "output": {"reservation_id": "HOTEL456"}
        },
        {
          "step": "Itinerary Planning",
          "tool": "ItineraryPlanner",
          "parameters": {
            "destination": "Paris",
            "interests": ["museums", "restaurants", "historical sites"],
            "days": 6,
            "hotel_reservation_id": "HOTEL456"
          }
        },
        {
          "step": "Transportation Arrangements",
          "tool": "LocalTransportAPI",
          "parameters": {
            "city": "Paris",
            "options": ["metro", "taxi", "bus"]
          }
        },
        {
          "step": "Packing List",
          "tool": "PackingListGenerator",
          "parameters": {
            "destination": "Paris",
            "duration": 6,
            "season": "summer",
            "activities": ["sightseeing", "dining"]
          }
        }
      ]
    }

Note that the "reference" parameter in the accommodation step and the "hotel_reservation_id" parameter in the itinerary step each use the output of the previous step.

Rationale: Separating this step from the HLPA lets the planning become more specific and more coherent. It distributes the cognitive load across different agents, each playing to its strengths. Comprehensive details and examples in the prompt help the DPA generate accurate specifications. Awareness of previous steps' outputs at each step also supports a more coherent and logical plan.
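
As a rough sketch of what "comprehensive information about each available tool" plus a one-shot example could look like in practice, the snippet below renders a small tool registry into a DPA prompt. The `ToolSpec` class, the function names, the prompt wording, and the parameter descriptions are assumptions for illustration. Only the tool and parameter names come from the example output above.

```python
import json
from dataclasses import dataclass, field


@dataclass
class ToolSpec:
    """Hypothetical description of one tool the DPA may select."""
    name: str
    usage: str
    parameters: dict[str, str] = field(default_factory=dict)  # name -> meaning


def render_tool_section(tools: list[ToolSpec]) -> str:
    """Turn tool specs into a plain-text block for the prompt."""
    blocks = []
    for tool in tools:
        params = "\n".join(
            f"    - {name}: {meaning}" for name, meaning in tool.parameters.items()
        )
        blocks.append(
            f"Tool: {tool.name}\n  Usage: {tool.usage}\n  Parameters:\n{params}"
        )
    return "\n\n".join(blocks)


def build_dpa_prompt(high_level_plan: dict, tools: list[ToolSpec],
                     one_shot_example: dict) -> str:
    """Combine instructions, tool details, an example, and the HLPA plan."""
    return "\n\n".join([
        "Refine the high-level plan below into a detailed JSON plan. "
        "For every step choose exactly one tool and fill in its parameters.",
        "Available tools:\n" + render_tool_section(tools),
        "Example of a detailed step:\n" + json.dumps(one_shot_example, indent=2),
        "High-level plan:\n" + json.dumps(high_level_plan, indent=2),
    ])


tools = [ToolSpec(
    name="FlightSearchAPI",
    usage="Search for flights between two cities on a given date.",
    parameters={"departure": "City of departure",
                "destination": "City of arrival",
                "date": "Travel date, YYYY-MM-DD"},
)]
example_step = {"step": "Flight Booking", "tool": "FlightSearchAPI",
                "parameters": {"departure": "London", "destination": "Paris",
                               "date": "2024-08-01"}}
high_level_plan = {"steps": [{"step": "Flight Booking",
                              "description": "Find and book flights to Paris."}]}

dpa_prompt = build_dpa_prompt(high_level_plan, tools, example_step)
print(dpa_prompt)
```

Keeping tool descriptions in a registry rather than hard-coding them into the prompt text means the same data can later be reused to check the DPA's output.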

3. Action Agent (AA)

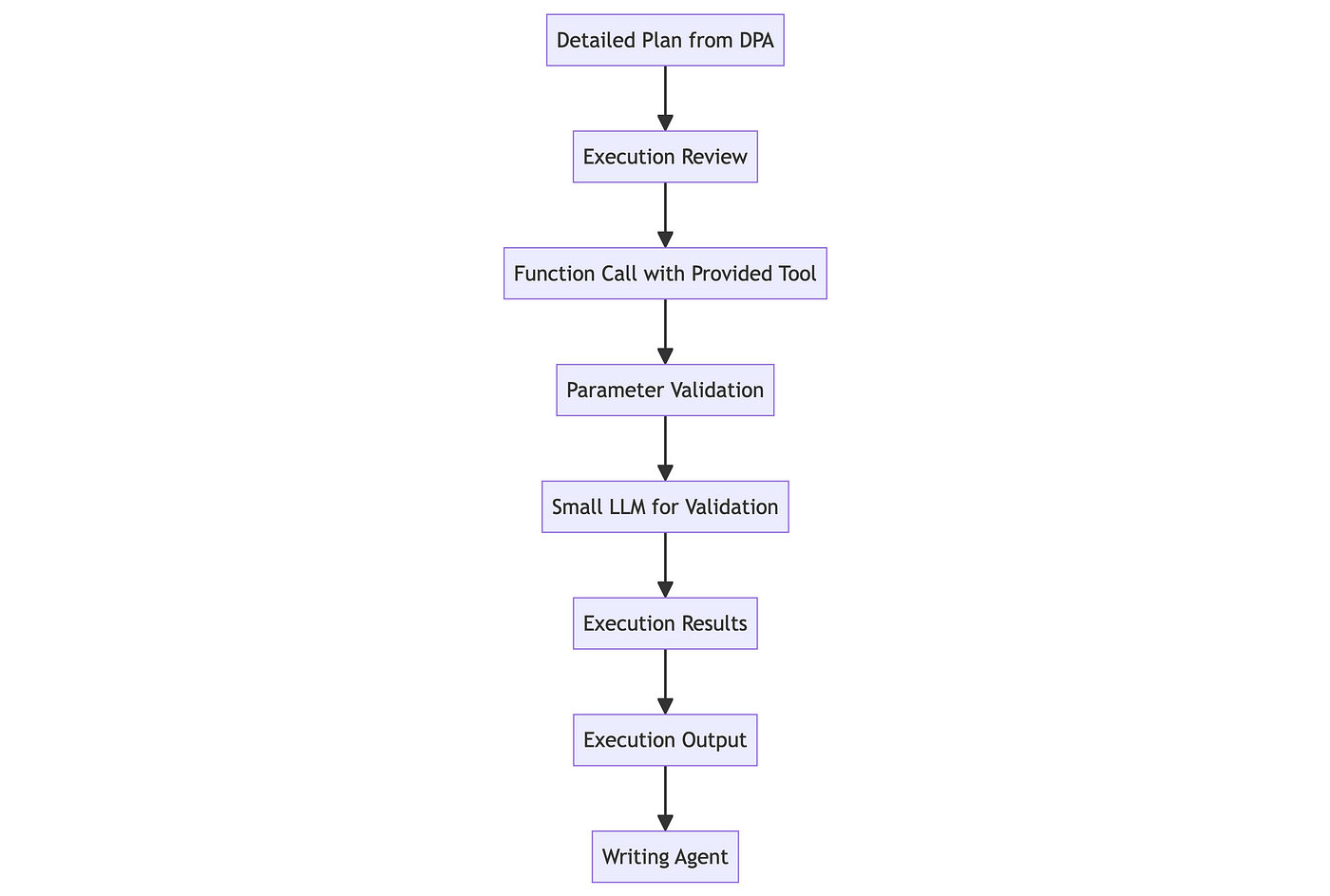

The Action Agent (AA) processes the JSON output from the Detailed Planning Agent (DPA) in a step-by-step sequence. For each step, the AA calls the specified tool using the parameters provided by the DPA and validates or adjusts them based on instructions embedded within the function call. The AA also has temporal memory, so it is able to reference parameters from previous steps, such as using a country ID retrieved earlier to look up flights. This approach helps ensure that each step is executed precisely and efficiently, closely following the detailed plan set by the DPA.

- Function: Sequentially executes each step of the detailed plan.

- Implementation: Utilises simple function calls with predefined tools and parameters. Each function call is provided only one tool, which anecdotally seems to speed things up and reduce variability.

- Safety Feature: Incorporates a small LLM (e.g., GPT-3.5 or GPT-4o-mini) for parameter validation and handling edge cases.

Action Agent Workflow: From Detailed Plan to Execution. Image by author.

Example Execution:

{ "step": "Flight Booking", "execution": "FlightSearchAPI.search_flights(departure='London', destination='Paris', date='2024-08-01', class='Economy')"}

Rationale: This structured approach limits guesswork and can, therefore, bring down the number of errors. Embedding a small LLM with function calls for parameter validation and edge-case handling also adds robustness without a significant cost to performance.
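
The execution loop described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: `execute_plan`, the `validate` hook (a stand-in for the small validating LLM), and the fake tools are all hypothetical. The point it shows is that each step sees exactly one tool, and that results are kept in memory so later steps can use them.

```python
from typing import Any, Callable

Tool = Callable[..., Any]
Validator = Callable[[dict, dict], dict]


def identity_validator(step: dict, memory: dict) -> dict:
    """Stand-in for the small validating LLM: returns parameters unchanged."""
    return dict(step["parameters"])


def execute_plan(plan: dict, tools: dict[str, Tool],
                 validate: Validator = identity_validator) -> list[dict]:
    """Run each step in order, one tool per step, remembering every result."""
    memory: dict[str, Any] = {}  # step name -> result of that step
    results = []
    for step in plan["steps"]:
        tool = tools[step["tool"]]       # only the tool named in the plan
        params = validate(step, memory)  # may adjust parameters using memory
        result = tool(**params)
        memory[step["step"]] = result
        results.append({"step": step["step"], "result": result})
    return results


# Fake tools (assumptions) so the sketch runs without real APIs.
def fake_flight_search(departure: str, destination: str) -> dict:
    return {"confirmation_number": "ABC123"}


def fake_hotel_booking(location: str, reference: str = "") -> dict:
    return {"reservation_id": "HOTEL456", "reference": reference}


def fill_reference(step: dict, memory: dict) -> dict:
    """Example validator: copy the flight confirmation into the hotel call."""
    params = dict(step["parameters"])
    if step["tool"] == "HotelBookingAPI":
        params["reference"] = memory["Flight Booking"]["confirmation_number"]
    return params


plan = {"steps": [
    {"step": "Flight Booking", "tool": "FlightSearchAPI",
     "parameters": {"departure": "London", "destination": "Paris"}},
    {"step": "Accommodation Booking", "tool": "HotelBookingAPI",
     "parameters": {"location": "Paris"}},
]}
tools = {"FlightSearchAPI": fake_flight_search,
         "HotelBookingAPI": fake_hotel_booking}

results = execute_plan(plan, tools, validate=fill_reference)
print(results)
```

Since the loop never replans, a failure surfaces at the exact step that caused it, which is the debugging property the article argues for.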

4. Writing Agent (WA)

Finally, the Writing Agent (WA) synthesises the outputs from each completed step into a response. The WA draws on information from each step and follows instructions from its prompt to output the desired narrative in relation to the user's query.

- Function: Synthesises the final response by compiling information from all executed steps.

- Input: Array of responses generated by the Action Agent.

- Implementation: The choice of model depends on the number of responses expected per agent request and the desired presentation style. For simple tasks, use the smallest effective model. For more complex tasks, such as those that need to consider lots of data, a larger foundation model will likely be necessary.

- Output: Depending on your solution needs, the Writing Agent can generate concise summaries, detailed narratives, or structured data outputs, ensuring the final result is coherent and useful.

Example Execution:

Input:

    [
      {"step": "Flight Booking", "result": "Flight from London to Paris booked for 2024-08-01."},
      {"step": "Accommodation Booking", "result": "Hotel in Paris reserved from 2024-08-01 to 2024-08-07."},
      {"step": "Itinerary Planning", "result": "Itinerary includes visits to the Louvre, Eiffel Tower, and a Seine River cruise."},
      {"step": "Transportation Arrangements", "result": "Metro and taxi arrangements confirmed for local travel."},
      {"step": "Packing List", "result": "Packing list generated: summer clothes, comfortable shoes, travel documents, and camera."}
    ]

Output: Your trip to Paris is all set! Your flight from London to Paris is booked for 2024-08-01. You will be staying in a hotel from 2024-08-01 to 2024-08-07. The itinerary includes visits to the Louvre, the Eiffel Tower, and a Seine River cruise. Local transportation via metro and taxi has been arranged. Don't forget to pack summer clothes, comfortable shoes, travel documents, and a camera. Enjoy your trip!

Rationale: The Writing Agent ensures that the final output is not just a collection of individual responses but a comprehensive narrative that fully addresses the user's original query based on the information provided.
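
One simple way to hand the Action Agent's results to the Writing Agent is to flatten them into a numbered list inside the prompt. The sketch below assumes a hypothetical `build_writer_prompt` helper and prompt wording, and it stops at building the prompt. The model call itself is left out.

```python
def build_writer_prompt(query: str, results: list[dict],
                        style: str = "a concise summary") -> str:
    """Combine the user's query and all step results into one writer prompt."""
    lines = [
        f"User query: {query}",
        f"Write {style} that answers the query using only the step results below.",
        "Step results:",
    ]
    lines += [
        f"{number}. {item['step']}: {item['result']}"
        for number, item in enumerate(results, start=1)
    ]
    return "\n".join(lines)


step_results = [
    {"step": "Flight Booking",
     "result": "Flight from London to Paris booked for 2024-08-01."},
    {"step": "Accommodation Booking",
     "result": "Hotel in Paris reserved from 2024-08-01 to 2024-08-07."},
]

writer_prompt = build_writer_prompt("Plan a week-long trip to Paris", step_results)
print(writer_prompt)
```

The `style` argument is where the choice between summaries, detailed narratives, or structured output could be expressed.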

Why SeqRAG Works in Production Environments

SeqRAG's design aims to make it well-suited for the rigours of a production environment based on the following key features:

- Speed: Fixing the sequence of steps upfront avoids the overhead of repeated replanning, which suits production environments where consistency and speed are key.

- Reliability: The structured, step-by-step approach reduces variability and makes outcomes more predictable — an essential quality for production environments.

- Debuggability (that's a word, right?): In case things go wrong, finding and fixing the problems is much easier with SeqRAG's linear process. Issues can be identified as early as the first step without having to wait for the whole plan to be executed.

- Understandability: SeqRAG's sequential flow has, in our experience, made it easier to understand for the developers implementing it. Other frameworks rely on interacting agents that each perform part of the task, which adds considerable complexity and unpredictability. SeqRAG keeps the flow clear and therefore easier to handle, and each agent can be tested independently across a variety of scenarios.

- Less Computational Overhead: SeqRAG reduces computation overhead by avoiding continuous replanning.

- Flexibility: In the modular SeqRAG architecture, these components can easily be swapped or upgraded as better technology becomes available.

Limitations of SeqRAG

Although SeqRAG has a set of advantages, it also has limitations that impact its effectiveness in certain scenarios:

- Initial Plan Errors: If the plan is wrong at the beginning, SeqRAG has no way to correct it automatically. Future work could add validation steps or heuristic checks to catch such errors sooner rather than later. However, this weakness can also be seen as a strength, as it makes it easy to tell early on whether an execution of SeqRAG is likely to succeed.

- Limited Adaptability: It is less able to handle unexpected information or changes than more adaptive approaches.

- Dependency on Initial Planning Quality: It is, to a certain degree, dependent upon the quality of the initial HLPA plan.

- Risk of Overspecialization: It may be less versatile for general-purpose problem-solving than more flexible approaches.

Areas for Further Research and Optimisation

- Dynamic Model Selection: An adaptive model selector could choose bigger models for complex plans and smaller ones for simpler operations based on query complexity, finding a balance between relevance and speed.

- Parallelisation: For example, several high-level plans could be created in parallel and the best one selected. Steps in the detailed plan that are independent of each other could also be run in parallel to save time.

- Feedback Loops: Execution feedback could be fed back into the planning phases. For now, this is too slow and introduces too much non-determinism, which makes debugging burdensome.

- Caching and Transfer Learning: Storing and reusing common plan elements when similar queries are executed could bring performance benefits, as could improvements in prompt caching.

- Plan Validation: A validation step for high-level and detailed plans may increase reliability. It could be performed separately, either by an agent or through heuristic regex checks, to ensure that the plan is feasible to execute and complete.
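
As an illustration of the plan validation idea in the list above, here is a small heuristic checker for a detailed plan. It is a sketch of one possible approach, not part of SeqRAG: the `REQUIRED_PARAMS` registry, the set of date-like parameter names, and the error messages are assumptions chosen to match the Paris example.

```python
import re

# Hypothetical registry: tool name -> parameters that must be present.
REQUIRED_PARAMS = {
    "FlightSearchAPI": {"departure", "destination", "date"},
    "HotelBookingAPI": {"location", "check_in", "check_out"},
}
DATE_KEYS = {"date", "check_in", "check_out"}  # assumed to hold ISO dates
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def validate_detailed_plan(plan: dict,
                           required_params: dict[str, set[str]]) -> list[str]:
    """Return a list of human-readable problems; empty means no issue found."""
    steps = plan.get("steps")
    if not isinstance(steps, list) or not steps:
        return ["plan has no steps"]
    errors = []
    for index, step in enumerate(steps):
        name = step.get("step", f"step {index}")
        tool = step.get("tool")
        params = step.get("parameters", {})
        if tool not in required_params:
            errors.append(f"{name}: unknown tool {tool!r}")
            continue
        missing = required_params[tool] - set(params)
        if missing:
            errors.append(f"{name}: missing parameters {sorted(missing)}")
        # Regex heuristic: date-like parameters must look like YYYY-MM-DD.
        for key in sorted(DATE_KEYS & set(params)):
            if not ISO_DATE.fullmatch(str(params[key])):
                errors.append(f"{name}: parameter {key!r} is not YYYY-MM-DD")
    return errors


good_plan = {"steps": [
    {"step": "Flight Booking", "tool": "FlightSearchAPI",
     "parameters": {"departure": "London", "destination": "Paris",
                    "date": "2024-08-01"}},
]}
bad_plan = {"steps": [
    {"step": "Flight Booking", "tool": "FlightSearchAPI",
     "parameters": {"departure": "London", "date": "1 Aug"}},
    {"step": "Packing List", "tool": "PackingListGenerator", "parameters": {}},
]}

print(validate_detailed_plan(good_plan, REQUIRED_PARAMS))
print(validate_detailed_plan(bad_plan, REQUIRED_PARAMS))
```

Checks like these only confirm that a plan is well-formed. Whether the plan is actually sensible for the query would still need an agent or a human reviewer.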

The Evolution of AI Planning and SeqRAG's Place Within It

The broader advancements in AI-assisted planning have informed SeqRAG's development and are worth being aware of.

Frameworks like PlanRAG, LLM-DP, LLM+P, ReAct/Reflexion, and Microsoft's AutoGen each represent distinct approaches to complex task management, serving as both inspiration and points of differentiation for SeqRAG.

PlanRAG emphasizes adaptive retrieval-based planning, adjusting dynamically through each stage, whereas SeqRAG opts for upfront planning to prioritize speed and consistency. Similarly, LLM-DP combines LLMs with symbolic planners for tasks that involve physical interactions, diverging from SeqRAG's focus on structured information retrieval. The LLM+P and ReAct/Reflexion approaches add layers of adaptability and reflection, handling unpredictable environments, while SeqRAG's design focuses on minimizing variability.

Finally, AutoGen exemplifies flexible, multi-agent workflows adaptable to diverse applications and served as the initial inspiration for SeqRAG's specialised agent roles.

SeqRAG's purpose is not to replace these varied frameworks but to serve as an efficient, reliable option for scenarios that benefit from a structured, step-by-step approach, which we hope makes it suitable for predictable, multi-step tasks in production environments.

SeqRAG provides one approach to handling complex, plan-based queries, focusing on efficiency, consistency, and specialisation through sequential steps. We hope this framework offers a starting point for further experimentation in developing reliable, production-ready AI agents.

Dive into our GitHub repo, get your hands dirty with the code, and join the Agent Revolution.

Happy agent building, everyone!

Special thanks to Henry Cleland, Erik Schwartz, Colin Ke Han Zhang. We worked together for many years on the ideas that eventually became SeqRAG, and they deserve equal credit if anything above is useful. Thank you guys!

Disclosure of Affiliation

As one of the creators of SeqRAG, developed in collaboration with my team at Elsevier, I am excited to share our approach to making complex AI workflows more accessible. This project is our way of contributing to the broader AI community, which has inspired our work. It offers a solution we hope others will find both practical and educationally useful.